Magnitude Notation

By relieving the brain of all unnecessary work, a good notation sets it free to concentrate on more advanced problems, and in effect increases the mental power of the [human] race.

A.N. Whitehead (via Ken Iverson)

The modern number technology is scientific notation, which is unnecessarily complicated. It’s difficult to type and speak and read, and it’s easily deformed by a simple cut-and-paste [6.022×1023 from my clipboard just now]. Even its name is a seven-syllable mouthful.

But most importantly:

Scientific Notation buries the lede

For instance, you may have had to memorize Avogadro’s Number in high school chemistry:

6.022 × 10²³

which is pronounced “six point oh two two times tentatha twenty-third”.

Now, this “number” actually has several numbers within it. Which of these numbers (the 6.022, the 10, or the 23) is the most important?

It’s the “23”. The magnitude of the number: how long is the number? How many digits does it have? This is why a cheeky chemistry teacher would laboriously write this out on the whiteboard:

602,200,000,000,000,000,000,000

Every other component of the number is less important. Far less important. Even the first digit, the “6” in this case, is 10²³ times less important than the “23”. But the magnitude is tucked away at the very end in a smaller font, like a footnote.

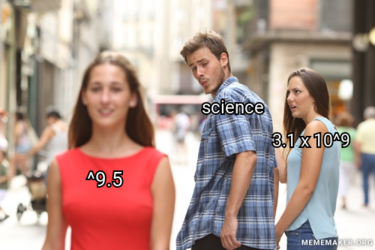

This is the crux of the problem. Scientific notation is perfectly functional as a general solution for precise numeric computation, but design-wise for humans it is backwards. The most important number should come first.

On top of that, scientific notation is complicated to do actual math with; arithmetic in scientific notation requires dealing with the exponents and coefficients separately.
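To make that concrete, here is a minimal Python sketch of multiplying two numbers written in scientific notation. The `sci_multiply` helper and its (coefficient, exponent) pair representation are illustrative assumptions, not anything standard. The coefficients multiply, the exponents add, and the result then has to be renormalized so the coefficient stays between 1 and 10.

```python
def sci_multiply(a, b):
    """Multiply two (coefficient, exponent) pairs, each with 1 <= coefficient < 10.

    Hypothetical helper for illustration only.
    """
    coeff = a[0] * b[0]  # the coefficients multiply...
    exp = a[1] + b[1]    # ...while the exponents add
    # A product of two coefficients in [1, 10) lies in [1, 100),
    # so at most one shift brings it back into [1, 10).
    if coeff >= 10:
        coeff /= 10
        exp += 1
    return coeff, exp

# (6.022 × 10^23) × (3 × 10^0): three moles' worth of particles
coeff, exp = sci_multiply((6.022, 23), (3.0, 0))
print(f"{coeff:.4f} × 10^{exp}")  # 1.8066 × 10^24
```

Even this toy version has to track two separate numbers plus a normalization rule.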

Mag Numbers

Within the current system of scientific notation, we could ditch the coefficient and just use the exponent: 10²³ (or “tentatha 23”), which removes a lot of the clutter. Then if we do want more precision, we don’t actually need a separate coefficient; we can just use a fractional exponent:

10^23.77974

In computer-land, you can almost imagine “1e23.77974” being suitable in any computer language that allows the “6.022e23” notation.
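Converting between an ordinary number and its magnitude is just a base-10 logarithm and its inverse. Here is a minimal Python sketch, where `to_mag` and `from_mag` are hypothetical names. Python itself has no `1e23.77974` literal (`float("1e23.77974")` raises `ValueError`), so the fractional power has to be computed as `10 ** m`.

```python
import math

def to_mag(x):
    """Magnitude of a positive number: its base-10 logarithm."""
    return math.log10(x)

def from_mag(m):
    """Inverse of to_mag: ten raised to a possibly fractional magnitude."""
    return 10 ** m

print(round(to_mag(6.022e23), 5))  # 23.77974
print(from_mag(23.77974))          # about 6.022e23

# For a whole number >= 1, the integer part of its magnitude, plus one,
# is how many digits it has.
n = 602_200_000_000_000_000_000_000
print(len(str(n)), math.floor(to_mag(n)) + 1)  # 24 24
```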

And how many sig figs do you actually need as a layperson, or even as an undergraduate? In most cases we can round up to 10^23.8 or even 10²⁴, and either is a lot closer to the actual number than if you misremember it as 6.023 × 10²² (see the difference?). Rounding to 10²⁴ is off by a factor of about 1.66; slipping one digit in the exponent is off by a factor of ten.
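Those error factors are easy to check with plain floating-point arithmetic (nothing here is specific to mag notation):

```python
avogadro = 6.022e23

# Rounded magnitudes stay within a small factor of the true value...
print(10 ** 23.8 / avogadro)  # roughly 1.05
print(10 ** 24 / avogadro)    # roughly 1.66

# ...whereas misremembering the exponent is off by about a factor of ten.
print(avogadro / 6.023e22)    # roughly 10
```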

To simplify the notation even further, we don’t need to write the base 10 at all, since base 10 is all but universal for specifying actual quantities: ^23.8

We need a symbol to distinguish mag numbers from ordinary numbers. The ^ (caret) is a common ASCII notation for exponentiation, used in many languages and tools, and available on every keyboard.
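Here is a minimal sketch of writing and reading the caret form, assuming positive inputs. `format_mag` and `parse_mag` are hypothetical names. Accepting both `^` and `↑` is a design choice of this sketch, not part of any standard.

```python
import math

def format_mag(x, digits=1):
    """Write a positive number in caret mag form, e.g. 6.022e23 -> '^23.8'."""
    # Round the base-10 logarithm; adding 0.0 turns a rounded -0.0 into 0.0.
    m = round(math.log10(x), digits) + 0.0
    # The 'g' format drops a trailing '.0', so a whole mag prints as '^24'.
    # It keeps up to 6 significant digits, plenty for typical mag precision.
    return f"^{m:g}"

def parse_mag(s):
    """Read '^23.8' or '↑23.8' back into an ordinary float."""
    if not s or s[0] not in "^↑":
        raise ValueError(f"not a mag number: {s!r}")
    return 10 ** float(s[1:])

print(format_mag(6.022e23))            # ^23.8
print(format_mag(6.022e23, digits=0))  # ^24
print(parse_mag("↑23.8"))              # about 6.3e23
```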

In print or more formal writing, it might be nice to use a character like the up-arrow (↑, which jibes with Knuth’s up-arrow notation, where a single arrow means exponentiation): ↑23.8

How to pronounce ^24 or ↑24

I say “mag 24”, short for magnitude, because it sounds like the inverse of log₁₀, which it is. People seem to get it intuitively, and it sounds a little mathpunk.

If you’re going to a scientific conference, you can always code-switch and use “tentatha”.

What about really small numbers?

Negative magnitudes extend Mag World into the realms of the extremely small. Mag notation can’t represent 0 exactly, but it can get arbitrarily close. (If you need zero, you don’t need mag notation; just write “0”.)

For example, a nanometer is 10⁻⁹ meters, or ↑-9 meters.

We could pronounce this “mag negative nine” but that’s an awkward mouthful. So we can abbreviate it to “mag neg 9” or even “neg 9” (perhaps short for “negligible”).
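In floating-point terms, negative magnitudes are just logarithms of numbers below 1, and zero is exactly where the logarithm stops working. A short illustration using Python’s standard `math.log10`:

```python
import math

# A nanometer is 10^-9 meters, so its magnitude is (up to float rounding) -9.
print(math.log10(1e-9))

# Smaller and smaller positive values get more and more negative magnitudes...
for x in (1e-3, 1e-30, 1e-300):
    print(x, math.log10(x))

# ...but zero has no magnitude at all: log10(0) is undefined.
try:
    math.log10(0)
except ValueError as err:
    print("no mag for zero:", err)
```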

Metric prefixes

The metric system has verbal prefixes for 24 of the exponents. For example, the “nano-” in nanometer above means ↑-9.

You should memorize these metric prefixes if you don’t know them already:

- ↑-12: pico- (trillionth)

- ↑-9: nano- (billionth)

- ↑-6: micro- (millionth)

- ↑-3: milli- (thousandth)

- ↑3: kilo- (thousand)

- ↑6: mega- (million)

- ↑9: giga- (billion)

- ↑12: tera- (trillion)

Here is the full list of metric prefixes.
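A small sketch of using the prefixes above to name an integer magnitude. The `PREFIXES` table covers only the eight prefixes listed here, plus an assumed empty prefix at ↑0; the full SI list has more. `with_prefix` is a hypothetical helper. It picks the largest listed prefix not exceeding the magnitude and returns whatever is left over as an ordinary multiplier.

```python
# Only the prefixes listed above, plus "" (no prefix) at mag 0.
PREFIXES = {-12: "pico", -9: "nano", -6: "micro", -3: "milli",
            0: "", 3: "kilo", 6: "mega", 9: "giga", 12: "tera"}

def with_prefix(mag):
    """Return (multiplier, prefix) such that multiplier x prefix equals ↑mag."""
    usable = [p for p in PREFIXES if p <= mag]
    if not usable:
        raise ValueError(f"no listed prefix at or below ↑{mag}")
    p = max(usable)
    # For integer mags this leftover is a plain int (1, 10, 100, ...).
    return 10 ** (mag - p), PREFIXES[p]

print(with_prefix(-9))  # (1, 'nano')   -> 1 nanometer
print(with_prefix(10))  # (10, 'giga')  -> 10 gigameters
print(with_prefix(-1))  # (100, 'milli') -> 100 millimeters
```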